Reality check: OpenTelemetry is not going to solve your observability woes

These days in the observability world it seems like OpenTelemetry is everywhere. Almost every vendor talks about OTel compatibility, it’s a common topic at conferences like Monitorama and Observability Day, and by velocity it’s one of the most popular CNCF projects. The sentiment around this project, at least as voiced by numerous thought leaders (largely employed by observability vendors), boils down to: adopting OTel will fix your observability woes. Get on board and don’t miss out! In this post I’m going to talk about what OTel is and is not, and argue that while OTel standardizes instrumentation and telemetry collection, it fails to address the root causes of high observability costs and may even exacerbate them. Let’s go!

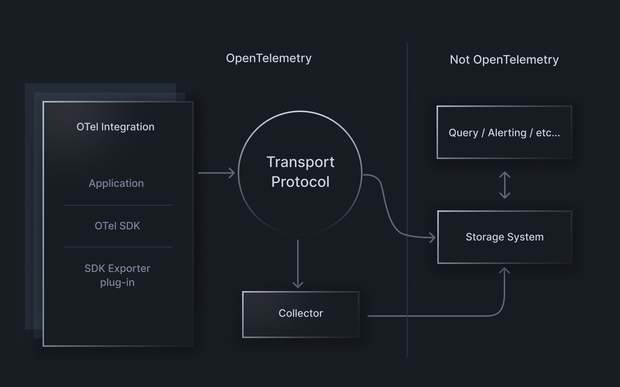

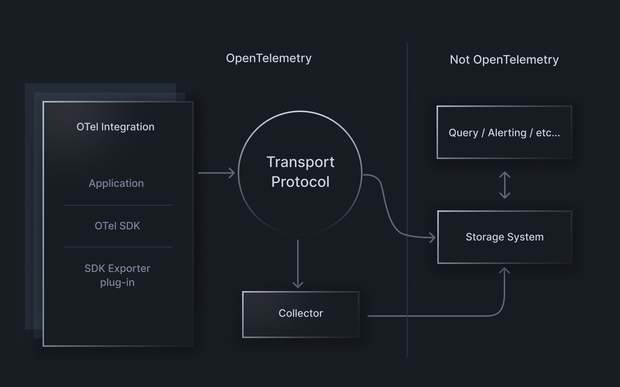

What is OpenTelemetry?

OpenTelemetry is a very large project encompassing a broad corpus of technology, and there is quite a bit of misconception among end-users about what OTel actually is. Let’s start by first breaking down OTel into its three major components:

- Language/ecosystem telemetry generation SDKs and abstractions: this part of the project provides per-language SDKs that enable users to create logs, metrics, and trace spans in a vendor-agnostic way. It additionally provides out-of-the-box telemetry signals for common frameworks (we vendors like to call this OOTB or zero-code telemetry). The most important part of this component is the abstraction between the language APIs used to generate telemetry and the vendor/system-specific transport of that telemetry to storage, analysis, and display systems. This is accomplished via exporter plugins that live inside the higher-level language APIs.

- The collector/observability pipeline ecosystem: the OTel Collector is a component that is meant to multiplex many different protocol sources to many different protocol sinks, potentially performing transformations along the way. In practice, it is mostly identical to similar tools like Fluent Bit, Vector, and Telegraf, the only difference being that it is ostensibly vendor neutral. These tools are generally deployed by infrastructure teams and hidden from application teams.

- Standard telemetry transport protocols: this part of the project provides a standard, committee-defined transport protocol for telemetry, known as OTLP. The idea behind a standard protocol is that compliant telemetry pipeline and ingestion systems no longer have to provide proprietary transport mechanisms and can theoretically utilize a common standard protocol. For distributed tracing, this is particularly important so that there is a standard for context propagation between different systems.
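The abstraction described in the first bullet can be sketched in a few lines. To be clear, this is a toy: `Span`, `Tracer`, `Exporter`, `VendorAExporter`, and `VendorBExporter` are hypothetical names invented for illustration, not the real OpenTelemetry API, and both output formats are made up. The only point is that application code talks to one API while the vendor-specific transport is a swappable plugin.

```python
# Illustrative only: these names are hypothetical and are NOT the real
# OpenTelemetry API. They mimic the split between an instrumentation API
# and pluggable, vendor-specific exporters.
from dataclasses import dataclass, field
from typing import Dict, List, Protocol


@dataclass
class Span:
    name: str
    attributes: Dict[str, str] = field(default_factory=dict)


class Exporter(Protocol):
    """Anything that can ship a span somewhere."""

    def export(self, span: Span) -> None: ...


class VendorAExporter:
    """Stand-in for a vendor that wants a line-oriented text format."""

    def __init__(self) -> None:
        self.sent: List[str] = []

    def export(self, span: Span) -> None:
        pairs = [f"{k}={v}" for k, v in sorted(span.attributes.items())]
        self.sent.append(" ".join([span.name] + pairs))


class VendorBExporter:
    """Stand-in for a vendor that wants flat dictionaries."""

    def __init__(self) -> None:
        self.sent: List[dict] = []

    def export(self, span: Span) -> None:
        self.sent.append({"span": span.name, **span.attributes})


class Tracer:
    """The only type that application code needs to know about."""

    def __init__(self, exporter: Exporter) -> None:
        self._exporter = exporter

    def record(self, name: str, **attributes: str) -> None:
        self._exporter.export(Span(name, dict(attributes)))


def handle_checkout(tracer: Tracer) -> None:
    # Instrumented application code: no vendor-specific calls here.
    tracer.record("checkout", user_tier="free")
```

Repointing `handle_checkout` from one backend to the other is a one-line change at the place where the `Tracer` is constructed, which is roughly the portability story OTel is aiming for.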

What problems is OpenTelemetry trying to solve?

Stepping back, let’s talk about why OpenTelemetry exists. What problems is it trying to solve? Historically, OpenTelemetry has origins in two older projects, OpenCensus and OpenTracing. All of these projects share a few common high level goals:

- Make it easier for end-users to generate metric, log, and trace telemetry in their applications, and when possible generate this telemetry for them by default (zero code / OOTB telemetry).

- Break vendor lock-in by at least theoretically allowing an end-user to trivially repoint their telemetry production to a different vendor.

How do OpenTelemetry goals map to common observability woes?

Stepping back even further, let’s detour into what I view as the largest observability pain points that end-users are facing right now.

- By far and away the largest problem that end-users are facing right now is that observability costs are out of control, especially as weighted as a percentage of overall infrastructure spending and perceived ROI. Users are paying for data that they never use, and at the same time still finding that they don’t have the data they need to solve customer problems. This situation is exacerbated by the industry moving towards microservices and containerized workloads, exploding the volume of data that needs to be generated to operate these systems reliably.

- Effective instrumentation, whether via logs, metrics, or traces, is challenging for those not familiar with the practice. Common patterns and out of the box instrumentation can help get up to speed more quickly.

- Vendor lock-in is a real issue insofar as users may be at the mercy of their existing vendor during contract negotiations, whether over contract price, the reliability of the vendor solution, or both. Unpredictable pricing when data volumes soar due to usage spikes (legitimate or accidental) is one of the most common grievances among budget owners of observability tooling.

- In the mobile/RUM space in particular, observability goals are very poorly served by the traditional “3 pillars” of logs, metrics, and traces. This is particularly frustrating as what is the point of observability if we cannot understand the true user experience of the customers actually using our applications? How many times have our backend systems had a 99.99% success rate only to have every response crashing a particular version of our mobile application?

Where does OpenTelemetry help and fall short?

With the background out of the way, let’s get down to it. Where does OTel help? Where does it fall short? If an organization undertakes the possibly large effort to migrate to an entirely OTel compliant infrastructure will that investment be worth it and help with the common observability woes listed in the previous section?

Cost reduction

As I discussed at length in my post on the observability cost crisis, most vendors charge by volume with little to no concern for how useful the ingested data is. For the “logging industrial complex,” more data equals ever increasing contract sizes as well as the notorious overage bills that have become the norm when contract limits are breached. As such, while OTel making it easier for end-users to generate voluminous telemetry is nice in theory for data completeness, it turns out it’s not so nice on the wallet. OTel absolutely falls short and will not help whatsoever with cost reduction and increasing observability ROI. In fact, because OTel will make it easier to produce more telemetry (see next section), adopting OTel may make your cost problems substantially worse.
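To make the volume-based pricing dynamic concrete, here is a back-of-the-envelope sketch. Every number in it (the committed volume, the per-GB prices, the 40% increase in ingest) is hypothetical and chosen only for illustration, and `monthly_bill` is a made-up helper, not any vendor's actual pricing formula.

```python
# All prices and volumes are hypothetical; they only show the shape of
# volume-based billing with an overage rate, not any real vendor's rates.

def monthly_bill(
    ingested_gb: float,
    committed_gb: float,
    committed_price_per_gb: float,
    overage_price_per_gb: float,
) -> float:
    """The committed volume is paid in full; anything above it is overage."""
    overage_gb = max(0.0, ingested_gb - committed_gb)
    return (
        committed_gb * committed_price_per_gb
        + overage_gb * overage_price_per_gb
    )


# Before: ingest matches the contract exactly.
baseline = monthly_bill(10_000, 10_000, 0.50, 0.75)

# After: richer default instrumentation raises ingest by 40%.
after_more_telemetry = monthly_bill(14_000, 10_000, 0.50, 0.75)

print(baseline, after_more_telemetry)  # 5000.0 8000.0
```

Under these assumed rates, 40% more data produces a 60% larger bill, and nothing in the formula asks whether any of the extra data was ever queried.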

Creating broad OOTB and high quality instrumentation

Setting aside the cost concern (it’s hard, but let’s pretend briefly that we all have infinite money), OTel does absolutely raise the telemetry bar across the industry. A common set of APIs and SDKs makes it much more likely that platforms and libraries will be pre-instrumented, meaning that out of the box observability should be vastly increased. Further, a common set of APIs and SDKs means that there will be more quality examples for everyone to learn from when looking to implement custom telemetry. The context propagation required for effective distributed tracing is particularly challenging to get right across different languages and ecosystems. To be clear, “more” does not necessarily equate to “quality,” but I would still argue that industry experts collaborating on a high quality OOTB base does help with education and is a step towards raising the bar for everyone involved.

Vendor lock-in

Vendor lock-in is trickier to analyze. Theoretically, easier switching will lead to greater competition and lower end-user prices for the same functionality. In practice, end-users still often wind up with significant lock-in due to the intricacies of how different systems are able to deal with different data input shapes, not to mention lock-in on the visualization, query, alerting, etc. side of the equation. I have not yet personally seen any substantial benefits of standardization on lock-in.

Mobile/RUM observability

OTel on its own does little to help solve the significant challenges inherent in mobile observability. More work on mobile language specific SDKs and automatic instrumentation will however help raise the base instrumentation bar as it has done and will continue to do elsewhere.

Does the OpenTelemetry lowest common denominator stifle more innovation?

As I mentioned in the introduction explaining what OTel is, it’s very important to understand that OTel has made no major advancements as of yet to the fundamentals of how telemetry is collected or sent to backend systems. Fundamentally, applications emit logs, metrics, and trace spans, and these get sent en masse to backend systems for processing, storage, and query. In some sense, this is inevitable. OTel is meant as a broad tent that can pull in many different existing technologies. The API/SDK side of OTel has been built by consensus amongst numerous stakeholders, and as such, it is inherently the lowest common denominator of a broad set of technologies. If one believes that how we have approached observability for the last 30 years is the be-all and end-all of the practice, perhaps this design by committee approach is fine. Cynically, it’s even in the best interest of “legacy” vendors who would prefer that we keep sending as much data as possible for the privilege of maybe using it one day (I like to call this the “logging industrial complex”). However, as I discussed in my posts on whether you really need all that telemetry and what you can do with 1000x the telemetry at 0.01x the cost, I am not one of those people. I firmly believe that by taking a fresh look at how observability is produced and consumed we can fundamentally change how we interact with our observability systems. Design by committee and sticking to the lowest common denominator of existing technology is not going to get us there.

The future of OpenTelemetry

From my perspective, by far the most valuable part of OTel is the standard set of APIs and SDKs that raise the bar on telemetry production across the industry. I believe we must make it as easy as possible for end-users to effectively instrument their applications and systems. The more we can do for them out of the box, the better. The problem, as described previously, is that forcing a larger volume of data into legacy pipelines via a design-by-committee, lowest-common-denominator OTLP is not ever going to solve the cost crisis that is plaguing the industry and leaving end-users with a poor taste about the overall experience they are getting. Only real innovation in how we approach telemetry collection and transport in the first place is going to materially change the end result. I am clearly biased and believe local storage with a control plane is the path forward, but I’m sure smart people will come up with other possible approaches, and they should not be constrained by an effectively already legacy transport protocol.

As such, if I were to offer one recommendation to the OTel project, it would be to split the three parts of the project (SDKs/APIs, collector, and OTLP transport) into three discrete efforts, and make it substantially more clear to end-users that these are separate efforts with separate audiences and outcome goals. This would increase the benefit and clarity for end-users who primarily care about end-user facing interaction with the system, and leave transport as a vendor-only implementation concern.

Regarding OTLP, I would personally love to collaborate with interested folks in the industry to add aspects of dynamic telemetry production directly to both the protocol and SDKs, effectively “upstreaming” a lot of the novel work we have done building bitdrift’s telemetry control plane and Capture SDK. This work would lead the way towards providing the benefits of dynamic real-time telemetry to more end-users, albeit at the expense of more vendor work (a worthwhile tradeoff!). The future of observability should be substantially richer telemetry for all, including mobile/RUM, at a non-eye-watering price point. OpenTelemetry is part of that puzzle, but in its current form is stifling innovation in favor of the logging industrial complex, and erroneously inflating its ability to fix known observability woes within the industry. Onward!